When AI models reinforce gender disparities, women rewrite the algorithm

Artificial intelligence recruiting tools have been found to favour men, while large language models have demonstrated a tendency to reinforce gender biases, associating women with caregiving roles and men with professional success.

By Saishya Duggal

Posted on March 6, 2025

Imagine applying, as a woman*, for a home loan through an AI-powered system. A stable income and a strong credit history would not save you from being flagged as ‘high risk’, while a male applicant with the same financial profile is approved. Why? The algorithm fails to account for the systemic inequities affecting women, inadvertently perpetuating the very subjectivity it was created to end.

This far-reaching stereotyping is not a one-off. Artificial intelligence (AI) recruiting tools have been found to favour men, while large language models (LLMs) have demonstrated a tendency to reinforce gender biases, associating women with caregiving roles and men with professional success. Similarly, home-based virtual assistants designed to serve users intuitively were initially given a feminine voice by default, whereas assistants that give instructions in cancer treatment and work with doctors were given a masculine voice. The perils and promises of AI are separated purely by a prompt, but one punched in by patriarchy long before this technology became pervasive.

Although she pursued an education in physics, Acharya has become an industry stalwart in genomics

The what: a (use) case of health tech

Anu Acharya is the CEO of MapMyGenome, a genomics enterprise offering DNA-based testing to improve the health and wellness of its customers. For her team, machine learning serves as a tool to integrate genomic and blood data into comprehensive scores and to generate predictive insights, such as an estimate of an individual’s biological age.

When asked about the use cases of AI in healthcare, Acharya sounds hopeful, despite acknowledging that most datasets in healthcare are “male, white and caucasian”. Her hope stems from breakthroughs in AI medicine such as precise diagnostics, non-invasive early detection methods, and better healthcare access through the identification of high-risk regions and populations. It remains tempered by realism, however: the transformative potential of AI hinges on addressing systemic biases in the underlying models. No matter how advanced a model is, it risks perpetuating existing disparities in care if it is not built equitably.

Consider the example of heart disease diagnosis using AI. A retrospective study found that AI detection of cardiovascular disease and heart failure was less accurate for women than for men. The bias does not come from nowhere; it mirrors real-world care disparities that have long disadvantaged women. Notably, doctors are more likely to misdiagnose women after a heart attack, and to misdiagnose their stroke symptoms in the emergency department.
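Disparities like this are typically surfaced by computing a diagnostic metric separately for each group rather than once for the whole population. As a minimal sketch under our own assumptions, the code below calculates sensitivity (the share of people with a condition whom the model correctly flags) per group. The function name `sensitivity_by_group` and the records are hypothetical toy values, not data from the study.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record is (group, has_condition, model_flagged). These are invented
# toy values for illustration, not data from any real study.
Record = Tuple[str, bool, bool]


def sensitivity_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Return, per group, the fraction of true cases the model flagged."""
    true_positives: Dict[str, int] = defaultdict(int)
    actual_positives: Dict[str, int] = defaultdict(int)
    for group, has_condition, flagged in records:
        if has_condition:  # sensitivity only considers people who have the condition
            actual_positives[group] += 1
            if flagged:
                true_positives[group] += 1
    return {g: true_positives[g] / n for g, n in actual_positives.items()}


toy_records = [
    ("women", True, True), ("women", True, False), ("women", True, False),
    ("women", False, False),
    ("men", True, True), ("men", True, True), ("men", True, False),
    ("men", False, True),
]
print(sensitivity_by_group(toy_records))
```

In this toy data the model catches one in three true cases among women but two in three among men, a gap that a single overall accuracy figure would hide.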

Acharya explains that these issues are compounded by the systematic marginalisation of women in clinical trials, initially justified by the now-debunked notion that hormone fluctuations and gender-specific parameters make women’s data less reliable. The exclusion of pregnant women from trials because of perceived risks, and the financial and legal costs attached to women’s participation, further contribute to insufficient female representation.

Acharya points out that this underrepresentation results in a significant lack of data on women’s health challenges such as PCOS, menopause and endometriosis. She recalls the phenomenon of “whining women”, in which women’s complaints about pain were dismissed by healthcare providers, who offered no help because of a dearth of documentation. All these biases are reflected in the medical data that AI models are currently trained on, leading to problems such as unequal treatment recommendations and drug dosages optimised for men’s bodies.

The why: technical aspects of AI biases

Acharya’s argument extends beyond biases in AI for healthcare. She posits that if women’s problems are not documented from the outset, they will not be conclusively addressed by AI systems. This means that bias may not just stem from the data an AI model trains on, but may be baked into the foundations of the system’s design, often reflecting an implicit stereotype that men’s needs are the default. Thus, when AI is built to serve a particular demographic, other groups may be neglected, marginalised or even harmed.

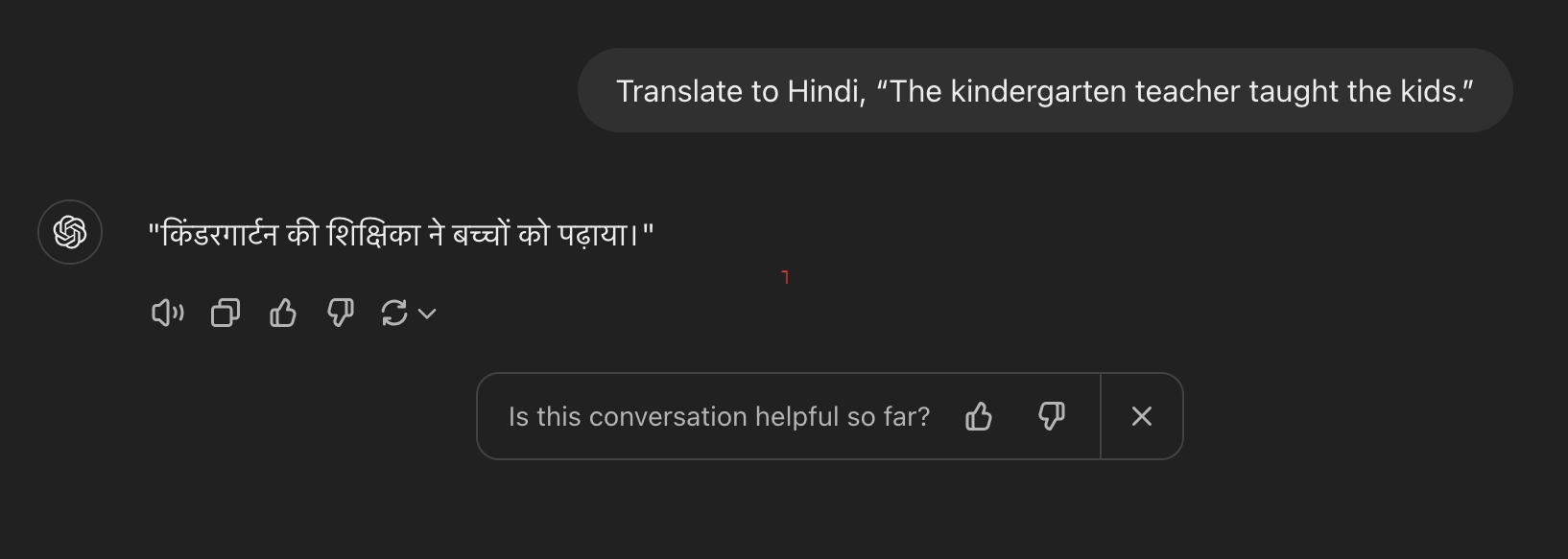

Some evaluators may consider this a biased translation, while others suggest it reflects the pragmatic reality of our times, where most primary teachers are female

Gupta has co-authored a repository on the status of gender in the LLM ecosystem; it is available on DFL’s Notion site

Few things illustrate this better than the weaponisation of deepfake technology against women. Deepfakes are AI-generated or AI-altered media that produce hyper-realistic but fabricated images or videos. According to research, men are far more likely to perpetrate image-based or synthetic media abuse, often using deepfakes for non-consensual pornography. This aligns with established patterns in traditional media, where sexual content has catered to male fantasies; the deepfake industry, lacking ethical safeguards and driven by male demand for explicit content, often reproduces these gendered biases. A now-defunct app, DeepNude, for instance, used AI to “unclothe” any woman’s photo but did not work effectively on photos of men.

Technically speaking, the lifecycle of AI development is riddled with elements that perpetuate bias, says Aarushi Gupta, a research manager at Digital Futures Lab, a research network focusing on the interaction between technology and society. Her analysis of LLM use in critical social sectors such as sexual and reproductive health (SRH) and gender-based violence (GBV) highlights systemic shortcomings that emerge at various stages of the process. A major issue lies in the selection of pre-trained base models like GPT, Llama or Claude: these are typically chosen for their accuracy and cost-effectiveness, with limited consideration for inherent biases. Biases become more pronounced during customisation. For example, when an SRH chatbot is supplemented with data from medical journals or government guidelines (through retrieval-augmented generation), it may inadvertently:

- underrepresent women, if the underlying data is laden with bias;

- amplify sampling biases, like excluding women from poorer or rural backgrounds;

- amplify biases in translation due to inherent cultural or linguistic assumptions. Here, word embedding models may incorrectly assign gender when translating from languages in which many nouns are gender-neutral (like English) into grammatically gendered languages (like Hindi); and

- cause annotation bias, as data labels reflect the assumptions of the people who annotate them. In contexts like GBV, for instance, what constitutes harmful or abusive content is interpreted subjectively, leading to a lack of consensus among annotators and, in turn, to the AI model failing to correctly address harmful gender-based content.
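Annotator disagreement of this kind is commonly quantified with an inter-annotator agreement statistic. As a hedged illustration, the sketch below implements Cohen’s kappa for two annotators, which corrects raw agreement for the agreement expected by chance. This is a standard statistic, not a method described by Gupta or Digital Futures Lab, and the labels are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable], labels_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two annotators who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the two annotators' label frequencies, summed over labels.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label throughout; kappa is undefined,
        # so this sketch returns 1.0 by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels two annotators gave to the same four messages.
annotator_1 = ["harmful", "harmful", "benign", "benign"]
annotator_2 = ["harmful", "benign", "benign", "benign"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

A kappa near 1 signals strong agreement; values near 0 mean annotators agree little more than chance would predict, which is the kind of lack of consensus that leaves a model without a reliable notion of “harmful”.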

Bias also permeates the evaluation stage of AI models: gender-disaggregated assessments are challenging to conduct because gender biases often manifest in subtle, context-dependent ways and may not be universally recognised. Gupta also highlights the lack of benchmarking datasets specific to the Indian population; such datasets are crucial for assessing AI models’ accuracy, fairness and bias, and would help operationalise gender bias evaluation.
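One common way to make gender-disaggregated evaluation concrete is counterfactual testing: swap the gendered words in a prompt and check whether a model’s output changes. The sketch below is a minimal illustration under stated assumptions. `swap_gender`, the tiny `SWAPS` table and the `toy_score` function are hypothetical stand-ins for a real model and word list, and evaluating grammatically gendered languages would need far richer, language-specific resources.

```python
import re
from typing import Callable, List, Tuple

# Hypothetical, deliberately tiny swap table. "her" is always mapped to "his",
# which is wrong when "her" means "him"; real word lists must handle such cases.
SWAPS = {
    "he": "she", "she": "he",
    "his": "her", "her": "his",
    "man": "woman", "woman": "man",
    "men": "women", "women": "men",
}
# Word boundaries stop "man" from matching inside "woman", or "he" inside "the".
_PATTERN = re.compile(r"\b(" + "|".join(SWAPS) + r")\b", re.IGNORECASE)


def swap_gender(text: str) -> str:
    """Replace each listed gendered word with its counterpart, keeping an initial capital."""
    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = SWAPS[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped
    return _PATTERN.sub(replace, text)


def counterfactual_gaps(prompts: List[str], score: Callable[[str], float]) -> List[Tuple[str, float]]:
    """For each prompt, return how much the score changes after the gender swap."""
    return [(p, score(swap_gender(p)) - score(p)) for p in prompts]


def toy_score(text: str) -> float:
    """Hypothetical stand-in for a model; biased on purpose so the gap is visible."""
    return 0.9 if re.search(r"\bman\b", text, re.IGNORECASE) else 0.6


print(swap_gender("The man said his loan was approved."))
print(counterfactual_gaps(["A man applied for the loan."], toy_score))
```

A consistently non-zero gap across many such prompt pairs suggests the model treats otherwise identical inputs differently by gender; building representative prompt sets for a given population is exactly where dedicated benchmarking datasets would help.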

To do: how women-led AI labs can help

Searching for solutions to tackle the systemic biases embedded in AI? Seeing the mountain is not enough; one must also figure out how to climb it. Professor Payal Arora, a trailblazer in inclusive AI and co-founder of the Inclusive AI Lab and FemLab.Co, emphasises the need to look beyond conventional solutions like policy.

“Regulation is not going to cut it,” she explains, highlighting how the vast financial resources of tech giants allow them to wield disproportionate power over AI’s development and deployment, leaving nations in the Global South with limited capacity to challenge it or redirect it towards more equitable outcomes. This is where Arora’s Inclusive AI Lab comes in: it collaborates with industry leaders in AI, UI and UX from giants like Adobe and Google to amplify diverse voices and causes routinely excluded from the AI development process. According to Arora, the lab is deliberately cross-sectoral but, unintentionally and fortuitously, women-led.

Arora’s book, titled ‘From Pessimism to Promise’, highlights her research on the status of AI in the Global South

The lab’s technical work is twofold. Firstly, it creates synthetic, de-biased datasets designed to fill gaps in existing data. These datasets approximate what equitable data might look like, enabling AI models to learn from more representative examples. Arora notes that this approach allows for more scalable and localised data solutions, meeting the needs of companies that seek equity in their data but lack the resources, expertise, infrastructure and/or nuance to achieve it. Secondly, the lab develops entirely new, representative datasets.

“It is not just about reproducing existing datasets, but about building fresh, equitable datasets,” says Arora. She and her team release their newly developed datasets under Creative Commons licences, which allow a wider audience to use them for collaboration and collective problem-solving.
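The article does not describe how the lab generates its synthetic, de-biased data. As a much simpler stand-in, the sketch below shows random oversampling, which rebalances a dataset by re-drawing records from under-represented groups until every group matches the largest one. It is an assumption-laden illustration, not the lab’s technique: unlike genuinely synthetic data, it only duplicates existing records and cannot fill gaps the original data never covered. The function name `oversample_to_balance`, the field name `sex` and the rows are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List


def oversample_to_balance(rows: List[Dict], group_key: str, seed: int = 0) -> List[Dict]:
    """Randomly re-draw rows from smaller groups until all groups match the largest."""
    if not rows:
        return []
    rng = random.Random(seed)  # seeded so the result is reproducible
    by_group: Dict[object, List[Dict]] = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)
    target = max(len(members) for members in by_group.values())
    balanced: List[Dict] = []
    for members in by_group.values():
        balanced.extend(members)
        # Sample with replacement to make up the shortfall (zero for the largest group).
        balanced.extend(rng.choices(members, k=target - len(members)))
    rng.shuffle(balanced)
    return balanced


# Hypothetical, skewed toy dataset: one woman for every three men.
toy_rows = [
    {"sex": "F", "id": 1}, {"sex": "M", "id": 2},
    {"sex": "M", "id": 3}, {"sex": "M", "id": 4},
]
balanced = oversample_to_balance(toy_rows, "sex")
print(sorted(row["sex"] for row in balanced))  # ['F', 'F', 'F', 'M', 'M', 'M']
```

The single woman’s record now appears three times. The groups are balanced, but the model still sees only one woman’s circumstances, which is why building genuinely new, representative data matters.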

The lab also works in areas where AI models fall markedly short. To counter biases in hiring algorithms, the lab is developing new forms of curricula vitae designed to include more women, especially women of colour. Similarly, to confront the lack of protection and accountability around generative AI (GenAI), the lab will soon collaborate with Google to provide women in the Global South with redressal mechanisms against non-consensual AI-generated media.

What is strange but reassuring is that despite how deeply gender bias has penetrated AI, all three women remain optimistic about its future. Gupta refuses to “(indict) the whole industry”, having engaged with developers dedicated to making their products gender-representative. Arora cites examples of protest movements in repressive regimes, especially those led by women, that have used AI to generate images of their struggle without implicating “real” people.

When Arora says that those in the Global South should not be condemned to the context in which they were born, she is also offering a key reflection on AI: a technology that currently mirrors the limiting notions of its origins should instead be designed to transcend borders and empower all, regardless of their starting point.

*This article focuses on gender disparities in AI systems, specifically addressing the experiences of cis-het women.

About the author

Saishya Duggal is a public policy and impact consulting professional working at the intersection of tech, finance, and climate policy. She has undertaken projects with the UP government, the World Bank Group, and Indian NBFCs. A graduate of Delhi University, she emphasises in her work the value of STEM in policy and its implementation. Her previous professional stints include Invest India and Ericsson.
